Key Takeaways

- Convergent validity is a subtype of construct validity that shows how strongly a measure correlates with other measures of the same construct.

- Convergent validity supports the interpretation of research findings by showing that different measures of the same concept are related in the expected way.

- Examples of convergent validity include correlations between self-reports and performance evaluations, or between survey responses and behavioral observations, of the same construct.

What Is Convergent Validity?

Convergent validity, sometimes called congruent validity, is the extent to which responses on a test or instrument are strongly related to responses on conceptually similar tests or instruments. Not only should a measure correlate with related variables, but it should also not correlate with dissimilar and unrelated ones (Krabbe, 2017).

Along with discriminant validity, convergent validity is one of the two facets of construct validity (American Psychological Association, 2010).

For a concrete example, a performance-based measure of swimming ability should correlate with participants' self-reported ability to swim across a pool.

In the domain of psychology, a researcher studying happiness may use a survey instrument to measure variables like satisfaction with life, frequency of positive emotions, and level of joy.

To demonstrate convergent validity for the survey instrument, the researcher might compare its scores with scores on measures of closely related constructs, such as optimism and contentment.

If scores on the instruments are strongly correlated, this suggests that the survey is measuring the intended construct and therefore has convergent validity (Krabbe, 2017).

Convergent validity can also be examined within a single test or instrument by checking how items meant to measure the same thing correlate with one another. For example, if two items on a survey measure self-confidence, one asking how the respondent reacts to a challenge and another asking about positive self-perception, they should be strongly correlated.

Convergent validity is useful because it provides evidence that a measure captures the construct it is intended to capture. It is an important consideration in establishing construct validity, especially when a researcher uses two different methods of data collection, such as participant observation and surveys.

Construct validity is a key component of research, providing evidence that the findings of a study are based on measurements that accurately reflect the construct being studied (Krabbe, 2017).

How to Measure Convergent Validity

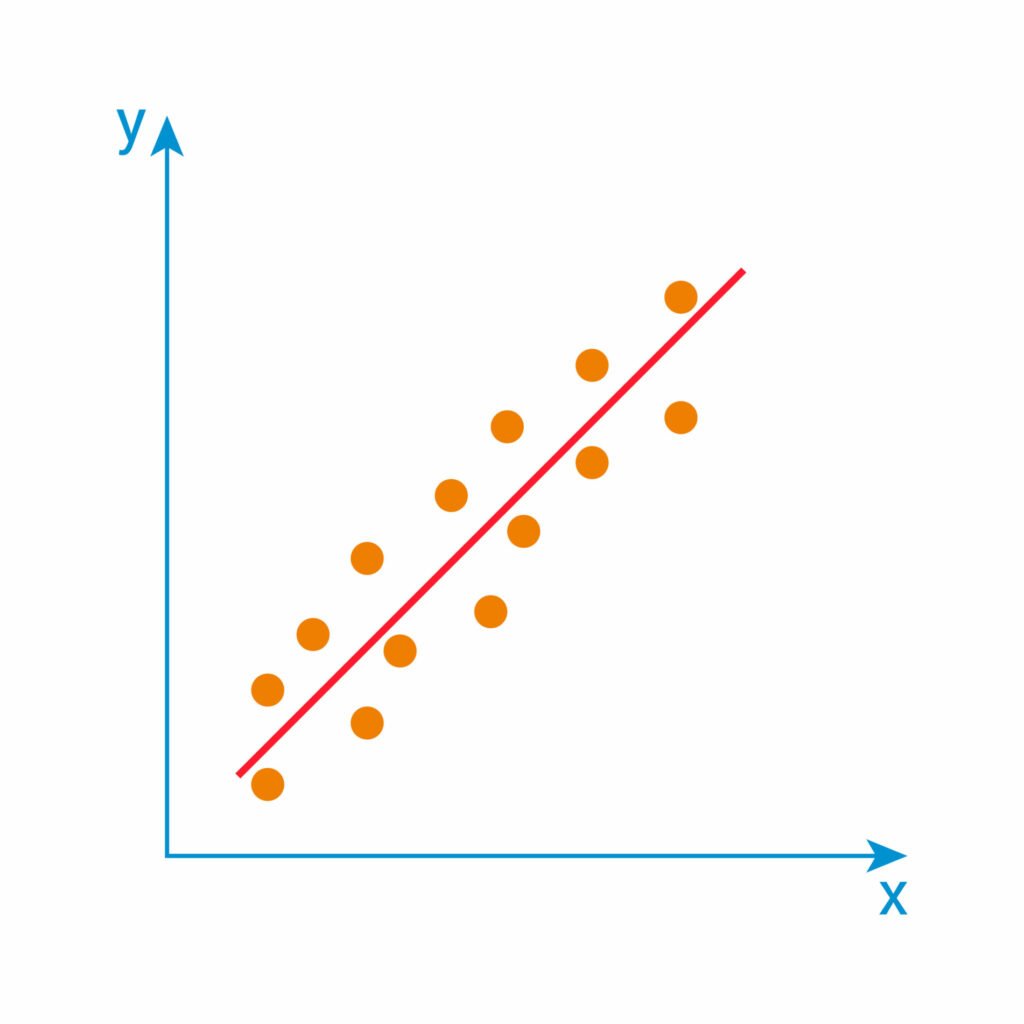

Assessing convergent validity starts from the assumption that the measures being compared are related to each other. In practice, it is assessed by examining the correlation between two or more measures of the same construct.

In doing so, it shows whether different instruments measure the same concept in a comparable way. It is important to note, however, that a correlation alone does not establish what lies behind the relationship, including whether it is causal.

For example, Poole and Rosenthal (1991) measured the ideology of members of the United States Congress based on their roll call votes.

These ideology scores were highly correlated with measures of the members' party unity on roll call votes. The most liberal and conservative members of the legislative parties tend to vote strongly with their party, while those in the ideological center may occasionally cross over and vote with the other side.

Interpreting this correlation as evidence of convergent validity requires asking whether these measures capture the same concept of ideology, whether one causes the other, or whether both are caused by the same factors (MacDonald et al., 2005).

Mathematically, one statistic often reported alongside convergent validity evidence is Cronbach's alpha (Cronbach, 1951). It is the most commonly used measure of internal consistency and indicates how strongly the individual items in a scale correlate with each other.

A higher alpha indicates that the items of a scale are more consistent with one another, in line with the within-instrument convergence described earlier. Strictly speaking, however, alpha is an index of reliability rather than validity: it does not show whether the scale correlates with other measures of the same construct, and it provides no information about the scale's overall construct validity or predictive power (MacDonald et al., 2005).
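Cronbach's alpha for k items is alpha = k / (k - 1) × (1 - sum of the item variances / variance of the total scores). The sketch below implements that formula on a small, hypothetical respondents-by-items matrix; the data are invented, and sample variances are used throughout.

```python
from statistics import variance  # sample variance (n - 1 denominator)


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*scores))  # transpose: one tuple of responses per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in scores])  # variance of scale totals
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses: 6 respondents x 4 items on a 1-5 scale.
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
    [4, 4, 3, 4],
]
print(f"Cronbach's alpha = {cronbach_alpha(responses):.2f}")
```

As a sanity check, two perfectly identical items give alpha = 1.0, since the total-score variance is then four times each item's variance.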

Other methods to assess convergent validity include Pearson's correlation coefficient and factor analysis. Pearson's correlation coefficient (r) quantifies the strength and direction of the linear relationship between two variables on a scale from -1 to +1; two measures of the same construct are expected to show a strong positive correlation.
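To make the definition concrete, here is a from-scratch sketch of Pearson's r: the sum of cross-products of deviations from the mean, divided by the square root of the product of the two sums of squared deviations. The function name `pearson_r` and the example scores are illustrative assumptions.

```python
from math import sqrt
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation: co-variation of x and y relative to their spreads."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx, my = fmean(x), fmean(y)
    dx = [xi - mx for xi in x]  # deviations from the mean of x
    dy = [yi - my for yi in y]  # deviations from the mean of y
    num = sum(a * b for a, b in zip(dx, dy))
    den = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if den == 0:
        raise ValueError("r is undefined when a measure has no variance")
    return num / den


# Hypothetical totals on two tests of the same construct.
test_a = [12, 15, 9, 20, 17]
test_b = [30, 34, 25, 41, 38]
print(f"r = {pearson_r(test_a, test_b):.2f}")
```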

Factor analysis explains the correlations among a set of observed variables in terms of a smaller number of underlying factors. If measures of the same construct load strongly on the same factor, this supports convergent validity, and the method can also show whether an instrument is measuring one construct or several (MacDonald et al., 2005).

Examples of Convergent Validity

Depression Questionnaires

If a psychologist is attempting to measure depression among a population using two different tests, they can examine how closely related the responses from those tests are to one another.

If the results from both tests correlate strongly, this is evidence of convergent validity; if there is no significant correlation between them, further investigation into this lack of correspondence may be necessary. For example, Krefetz et al. (2002) examined the convergent validity of the Beck Depression Inventory-II with the Reynolds Adolescent Depression Scale in psychiatric inpatients.

IQ Tests

Researchers can establish the convergent validity of IQ tests by comparing IQ test scores with other measures that assess related mental abilities.

For example, if a person is given an IQ test and then subsequently completes a test of verbal skills, researchers can compare the results of both tests to determine whether there is a correlation between the two sets of results.

For example, Firmin et al. (2008) evaluated what they termed the concurrent validity of three web-based IQ tests by correlating their scores with the Composite Intelligence Index of an established IQ test, the Reynolds Intellectual Assessment Scales (RIAS).

This type of comparison helps show that an IQ test measures what it purports to measure, namely intelligence, and so contributes to evidence of construct validity.

Education

Validity evidence is also important in education, where assessments need to capture what they are meant to. A closely related concept here is concurrent validity, a form of criterion-related validity: the correlation between performance on a given task or test and an outcome measured at around the same time, such as job performance or other indicators of student achievement.

By examining this correlation, researchers can determine if assessments are capturing meaningful information about students’ knowledge, skills, and abilities.

For example, a teacher may evaluate the concurrent validity of their assessments by comparing students' test scores with their performance on different dimensions of a practical final project or an internship related to the subject.

FAQs

Is convergent validity internal or external?

Strictly speaking, convergent validity is neither; it is a facet of construct validity. Internal validity concerns whether a study supports causal conclusions, and external validity concerns whether findings generalize beyond the study, whereas convergent validity concerns the degree to which different measures of a given construct are associated.

It therefore speaks to what a measure captures rather than to whether a study's results generalize to other people, settings, or contexts.

What is the difference between convergent and discriminant validity?

Discriminant validity indicates that the results obtained by an instrument do not correlate too strongly with measurements of a similar but distinct trait. For example, suppose a company sends candidate software engineers a test measuring how proficient they are at coding.

Scores on the coding test should not correlate too strongly with IQ scores; otherwise, the coding test might simply be another IQ test.

Convergent validity, on the other hand, indicates that a test correlates with well-established measures of the same construct. Both discriminant and convergent validity provide evidence of construct validity (Hubley & Zumbo, 2013).

What is the difference between convergent and divergent validity?

Divergent validity is yet another name used for discriminant validity and has even been used by some well-known writers in the measurement field (e.g., Nunnally & Bernstein, 1994), although it is not the commonly accepted term (Hubley & Zumbo, 2013).

Thus, the difference between convergent and divergent validity is the same as the difference between convergent and discriminant validity.

References

American Psychological Association. (2010). Standards for educational and psychological testing. Retrieved from http://www.apa.org/education/k12/testing-standards.pdf

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297-334.

Firmin, M. W., et al. (2008). Evaluating the concurrent validity of three web-based IQ tests and the Reynolds Intellectual Assessment Scales (RIAS). Eastern Education Journal, 37(1), 20.

Hubley, A. M., & Zumbo, B. D. (2013). Psychometric characteristics of assessment procedures: An overview.

Krabbe, E. C. W. (2017). Validity in quantitative research: A practical guide to interpreting validity coefficients in scientific studies. New York, NY: Routledge. doi:10.4324/9781315677620

Krefetz, D. G., Steer, R. A., Gulab, N. A., & Beck, A. T. (2002). Convergent validity of the Beck Depression Inventory-II with the Reynolds Adolescent Depression Scale in psychiatric inpatients. Journal of Personality Assessment, 78(3), 451-460.

MacDonald III, A. W., Goghari, V. M., Hicks, B. M., Flory, J. D., Carter, C. S., & Manuck, S. B. (2005). A convergent-divergent approach to context processing, general intellectual functioning, and the genetic liability to schizophrenia. Neuropsychology, 19(6), 814.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). New York: McGraw-Hill.

Poole, K. T., & Rosenthal, H. (1991). Patterns of congressional voting. American Journal of Political Science, 228-278.